Motivation

I've written a few home and business security apps that can monitor your residence or business while you are away. One of them includes a user interface with eyes that follow the motion of objects that pass near it. Given a stable light level, detecting and tracking motion is fairly easy, but detecting and tracking objects in an environment with automatic light level adjustment (as with the iOS camera) is fairly difficult. This blog post looks at four ways of approaching the problem. The two screenshots below are images from one of my apps on an iPad, showing the eyes tracking something (in this case, me moving by the iPad).

You can also look at OpenCV, which will save you a lot of time in terms of writing code. I tried it and it seemed a bit slow on an iOS device for the real time processing I am trying to do, but it is a mature and very capable open source project and is definitely worth looking at prior to coding your own solution.

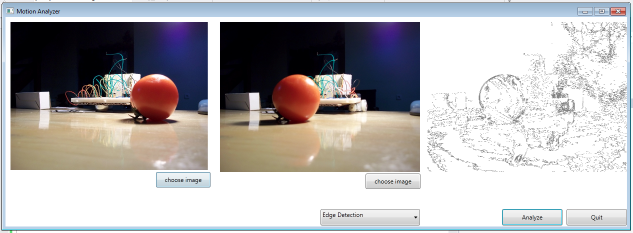

I've written an application in C# that I've taken screenshots from for the discussion. It's quite rough and the commenting is almost non-existent. You can do what you want with the code, but I don't accept any liability for your use or misuse of it. The zipped project file was developed using Visual Studio Express 12.

First Algorithm - Changed Pixels

The first problem I tried to solve was motion detection rather than motion tracking. I later adapted the same code to motion tracking, with fair results. The approach was to look for pixels whose luminosity changed by more than a certain cutoff. The major problem with this is that pixels change both where an object has been and where it is going. If images are processed quickly, you are basically seeing the leading edge and the trailing edge of the object, as the background is obscured at the front and revealed at the back. So quick processing and a slow-moving object give good results. For this and the subsequent screenshots I placed a tomato on my desk and moved it, taking photos of the tomato in two locations to see how the various algorithms handled motion.
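
The changed-pixel idea can be sketched roughly as follows. This is a minimal illustration in Python, not the C# code from the project: the function names (`luminosity`, `changed_pixels`, `centroid`), the luminosity weights, and the cutoff of 30 are all assumptions made for the example.

```python
def luminosity(pixel):
    """Approximate brightness of an (r, g, b) pixel (weights are an assumption)."""
    r, g, b = pixel
    return 0.299 * r + 0.587 * g + 0.114 * b


def changed_pixels(prev_frame, curr_frame, cutoff=30):
    """Return (x, y) positions whose luminosity changed by more than cutoff.

    Frames are lists of rows; each row is a list of (r, g, b) tuples.
    """
    changed = []
    for y, (prev_row, curr_row) in enumerate(zip(prev_frame, curr_frame)):
        for x, (p, c) in enumerate(zip(prev_row, curr_row)):
            if abs(luminosity(c) - luminosity(p)) > cutoff:
                changed.append((x, y))
    return changed


def centroid(points):
    """Average position of the changed pixels, or None if nothing changed."""
    if not points:
        return None
    n = len(points)
    return (sum(x for x, _ in points) / n, sum(y for _, y in points) / n)


# A one-row "desk": a red pixel moves from x=1 to x=4 on a white background.
WHITE, RED = (255, 255, 255), (200, 30, 30)
before = [[WHITE, RED, WHITE, WHITE, WHITE, WHITE]]
after = [[WHITE, WHITE, WHITE, WHITE, RED, WHITE]]
moved = changed_pixels(before, after)
```

In this toy example both the old position (x=1) and the new position (x=4) are reported as changed, so the centroid lands between them rather than on the object, which is the weakness described above.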


The biggest issue with doing this on an iOS device is that the light level adjustment is triggered frequently, making the majority of pixels appear to change. One can detect and discard frames with large shifts in light level, but this leaves the problem of false negatives for fast-moving objects (which blur and leave trails) and objects that pass near the camera. This would not be acceptable for security applications.
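
One way to picture the "discard frames with large shifts" step is a simple fraction test. The sketch below is in Python and works on grayscale frames (rows of luminosity values); the names `fraction_changed` and `looks_like_light_adjustment` and the 0.5 threshold are assumptions for illustration, not values taken from the apps.

```python
def fraction_changed(prev_frame, curr_frame, cutoff=30):
    """Fraction of pixels whose luminosity changed by more than cutoff."""
    total = 0
    changed = 0
    for prev_row, curr_row in zip(prev_frame, curr_frame):
        for p, c in zip(prev_row, curr_row):
            total += 1
            if abs(c - p) > cutoff:
                changed += 1
    return changed / total if total else 0.0


def looks_like_light_adjustment(prev_frame, curr_frame,
                                cutoff=30, max_fraction=0.5):
    """Treat the frame as a light level shift if most pixels changed.

    The 0.5 threshold is an assumed value, not one from the original apps.
    """
    return fraction_changed(prev_frame, curr_frame, cutoff) > max_fraction


base = [[100] * 4 for _ in range(4)]

# Every pixel gets brighter: discarded as a light level adjustment.
brighter = [[150] * 4 for _ in range(4)]

# A single pixel changes: kept as possible motion.
small_object = [row[:] for row in base]
small_object[1][1] = 200

# An object close to the camera covers 12 of 16 pixels: also discarded,
# which is the false negative described above.
near_camera = [[200] * 4, [200] * 4, [200] * 4, [100] * 4]
```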

Second Algorithm - Moving Edges

A second method is to look for new edges in an image. This works well when an "edgy" object, such as a person, walks in front of an "edge-less" background, such as a single-color wall. It also helps to avoid some of the problems with automatic light level adjustments. Basically, the algorithm ignores any edges that have disappeared and looks for new edges. So when an object moves against a plain background, the new edges are wherever the object now appears. This and the next two algorithms also deal with minor camera jitter or vibration better than looking at changed pixels, as they track edges rather than individual pixels. This algorithm is used in EyesBot Watcher, and it, along with the automatic light level adjustment, causes a saccade-like motion of the EyesBot Watcher eyes. Using this algorithm, the motion of the object looks like:

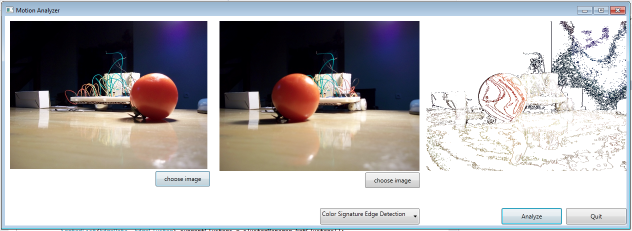
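
The "ignore disappeared edges, keep new ones" step can be sketched as a set difference. This Python example is an illustration only: it assumes grayscale frames, detects edges with a plain horizontal neighbor difference (a deliberate simplification; the detector used in the app is not described here), and the names `edge_set` and `new_edges` are hypothetical.

```python
def edge_set(frame, threshold=40):
    """Return positions where luminosity jumps between horizontal neighbors.

    Only horizontal neighbors are compared, which is an assumption made to
    keep the sketch short.
    """
    edges = set()
    for y, row in enumerate(frame):
        for x in range(len(row) - 1):
            if abs(row[x + 1] - row[x]) > threshold:
                edges.add((x, y))
    return edges


def new_edges(prev_frame, curr_frame, threshold=40):
    """Edges present in the current frame that were absent in the previous one."""
    return edge_set(curr_frame, threshold) - edge_set(prev_frame, threshold)


# A dark object (50) on a plain wall (200) moves from x=1..2 to x=4..5.
before = [[200, 50, 50, 200, 200, 200, 200, 200]]
after = [[200, 200, 200, 200, 50, 50, 200, 200]]

# The same second frame after a uniform +20 brightness shift.
after_brighter = [[v + 20 for v in row] for row in after]
```

In this toy case `new_edges(before, after)` and `new_edges(before, after_brighter)` give the same positions, since a uniform brightness shift leaves the differences between neighbors unchanged.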

A third method avoids the problem of newly exposed edges appearing to be moving edges by using color signatures on edges that have moved. It looks for a pre/post-edge color match between edges in the first and second frame, so that the actual edges of a given object can be matched up in the old and new frame. This works well as long as there is a single object in the frames that has a given color signature. In both of the supplied sets of photos there are lots of edges, so this and the previous algorithm don't work very well.
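
A pre/post-edge color match might look something like the Python sketch below. The signature here is just the coarsely quantized color on each side of a horizontal edge; that definition, the quantization step of 64, and the names `edge_signatures` and `displacement` are assumptions for the example rather than the project's actual design.

```python
def quantize(pixel, step=64):
    """Coarsen an (r, g, b) color so small lighting changes still match."""
    return tuple(channel // step for channel in pixel)


def color_distance(a, b):
    """Sum of absolute per-channel differences between two colors."""
    return sum(abs(ca - cb) for ca, cb in zip(a, b))


def edge_signatures(frame, threshold=120, step=64):
    """Map each horizontal edge position to its (pre, post) color signature."""
    signatures = {}
    for y, row in enumerate(frame):
        for x in range(len(row) - 1):
            if color_distance(row[x], row[x + 1]) > threshold:
                signatures[(x, y)] = (quantize(row[x], step),
                                      quantize(row[x + 1], step))
    return signatures


def mean_point(points):
    """Average (x, y) of a non-empty list of points."""
    n = len(points)
    return (sum(x for x, _ in points) / n, sum(y for _, y in points) / n)


def displacement(prev_frame, curr_frame, signature):
    """Average (dx, dy) of the edges carrying `signature`, or None if unmatched."""
    prev_pts = [p for p, s in edge_signatures(prev_frame).items() if s == signature]
    curr_pts = [p for p, s in edge_signatures(curr_frame).items() if s == signature]
    if not prev_pts or not curr_pts:
        return None
    (px, py), (cx, cy) = mean_point(prev_pts), mean_point(curr_pts)
    return (cx - px, cy - py)


# A red object on a white background moves three pixels to the right.
WHITE, RED = (255, 255, 255), (200, 30, 30)
before = [[WHITE, RED, RED, WHITE, WHITE, WHITE, WHITE]]
after = [[WHITE, WHITE, WHITE, WHITE, RED, RED, WHITE]]
white_to_red = (quantize(WHITE), quantize(RED))
move = displacement(before, after, white_to_red)
```

Because the match is on the white-to-red signature only, the edge left behind where the background was revealed is never confused with the object's own edge. With two objects sharing that signature, the averaged displacement would no longer describe either of them.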

The problem with the third algorithm is that all edges of a given color are treated the same. So if there were two oranges and each moved, the resulting motion tracking would be intermediate between the two differences in location. The solution, and the fourth approach, is to treat each set of spatially related edges as an object and track its motion separately. If there were two oranges in the frames and they moved relative to each other, the sets of related edges that matched most closely in terms of signature and location would then track the motion of each orange. I'm now working on this, but in the near future I will probably get back to improving my iOS apps rather than continue working on this in C#. The code is open-sourced, so if you want to work on it, please feel free.
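
Grouping spatially related edges could be sketched as a simple proximity clustering followed by nearest-centroid matching. This Python example is an assumption-heavy illustration: the names `cluster_points` and `track_clusters` and the `max_gap` value are hypothetical, and it matches clusters on location only, leaving out the signature comparison.

```python
def cluster_points(points, max_gap=2):
    """Group edge points so each is within max_gap of another in its cluster."""
    remaining = set(points)
    clusters = []
    while remaining:
        seed = remaining.pop()
        cluster, frontier = [seed], [seed]
        while frontier:
            x, y = frontier.pop()
            near = {p for p in remaining
                    if abs(p[0] - x) <= max_gap and abs(p[1] - y) <= max_gap}
            remaining -= near
            cluster.extend(near)
            frontier.extend(near)
        clusters.append(sorted(cluster))
    return sorted(clusters)


def centroid(cluster):
    """Average (x, y) of a non-empty cluster."""
    n = len(cluster)
    return (sum(x for x, _ in cluster) / n, sum(y for _, y in cluster) / n)


def track_clusters(prev_points, curr_points, max_gap=2):
    """Return one (dx, dy) per previous cluster, using the nearest current cluster.

    Simplification: two previous clusters may pick the same current cluster.
    """
    prev_centroids = [centroid(c) for c in cluster_points(prev_points, max_gap)]
    curr_centroids = [centroid(c) for c in cluster_points(curr_points, max_gap)]
    if not curr_centroids:
        return []
    moves = []
    for px, py in prev_centroids:
        cx, cy = min(curr_centroids,
                     key=lambda c: (c[0] - px) ** 2 + (c[1] - py) ** 2)
        moves.append((cx - px, cy - py))
    return moves


# Two "oranges": one moves right by 2, the other moves left by 2.
prev_edges = [(0, 0), (1, 0), (10, 0), (11, 0)]
curr_edges = [(2, 0), (3, 0), (8, 0), (9, 0)]
moves = track_clusters(prev_edges, curr_edges)
```

Averaging all the edges together would report no motion at all here, since both frames have the same overall centroid, while the per-cluster version recovers the two opposite movements.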

Take Away

It was useful to go through this exercise, since it appears that the fastest and most accurate way is to first find the changed pixels, then check which of them contain colors from the previous photo. This would generally limit the number of comparisons that need to be done to only the changed pixels. If the changed pixels were generally uniformly distributed across the screen, the change may be due to a light level adjustment, and tracking could be halted for that frame.
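
The "uniformly distributed" test could be approximated by dividing the frame into a coarse grid and counting how many cells contain a changed pixel. The Python sketch below is one possible reading of that idea; the grid size, the 0.75 coverage threshold, and the function names are assumptions.

```python
def cells_with_changes(changed, width, height, grid=4):
    """Return the set of (column, row) grid cells containing a changed pixel."""
    cells = set()
    for x, y in changed:
        cells.add((x * grid // width, y * grid // height))
    return cells


def is_uniform_change(changed, width, height, grid=4, min_coverage=0.75):
    """True if changed pixels appear in most grid cells.

    A high coverage suggests a light level adjustment rather than a moving
    object; the 0.75 threshold is an assumed value.
    """
    covered = len(cells_with_changes(changed, width, height, grid))
    return covered / (grid * grid) >= min_coverage


WIDTH = HEIGHT = 8

# Changes sprinkled over the whole frame: likely a light level adjustment.
spread_out = [(x, y) for x in range(0, 8, 2) for y in range(0, 8, 2)]

# Changes confined to one corner: likely a moving object.
localized = [(0, 0), (1, 0), (1, 1)]

halt_tracking = is_uniform_change(spread_out, WIDTH, HEIGHT)
keep_tracking = not is_uniform_change(localized, WIDTH, HEIGHT)
```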

Future Plans

It would be very interesting if the algorithm could use the signatures to determine what is moving. For example, using the third algorithm it is easy to tell whether a moving object against a white background is a tomato or a lemon, since they have distinct color signatures. Looking at the frequency with which color signatures change (visual texture), and the shape that a given cluster of edges takes, would permit determining whether a moving object was a cat or a car, for example. Lots of interesting stuff to code, some of which I'll be incorporating into two of my iOS apps, EyesBot and EyesBot Watcher.

See Also

I wrote a related article about visual machine learning and object recognition, which I'm working on integrating into some of my company's EyesBot line of products.

Computer vision

Artificial intelligence

Effecting the physical world
